Know when your AI stops sounding like your brand.

Real-time brand voice monitoring for production LLMs. Get alerted before your customers notice drift.

LLMs Have Measurable Personalities

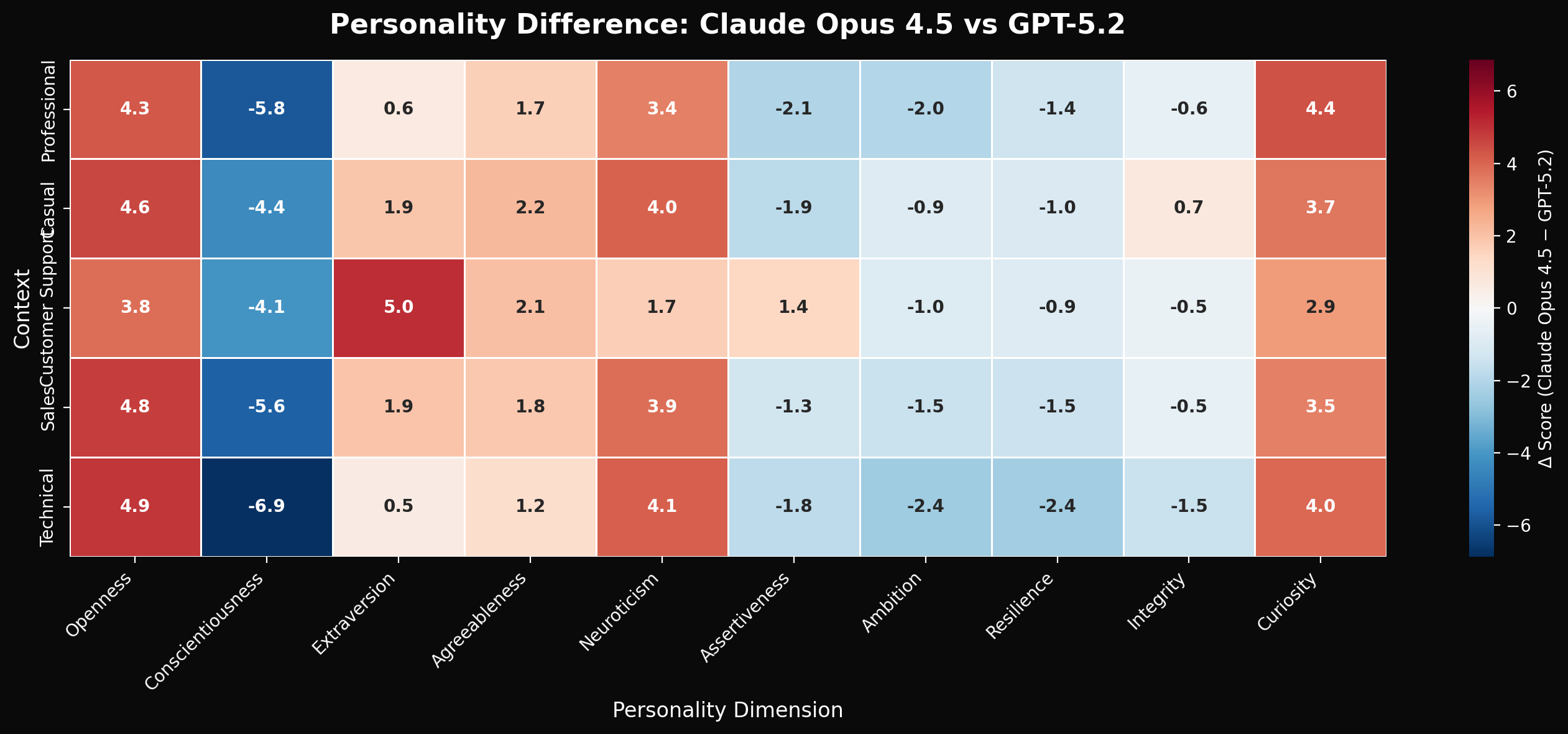

We benchmarked GPT-5.2 and Claude Opus 4.5 across 4,368 personality evaluations. The differences are statistically significant and practically meaningful.

Personality differences by context. Red = Claude higher, Blue = GPT higher.

See It In Action

Watch how Lindr monitors personality dimensions in real time as your LLM responds.

Why Lindr?

Most monitoring tools track if your model works. We track if it sounds like your brand.

Simple Integration

Define your brand voice, connect your LLM, and start getting alerts. Just a few lines of code.

    import lindr

    client = lindr.Client(api_key="lnd_...")

    # Define your target personality
    persona = client.personas.create(
        name="Support Agent",
        dimensions={
            "agreeableness": 85,
            "empathy": 80,
            "assertiveness": 65,
        },
    )

    # Evaluate your base model
    # (base_model_responses: the responses you have collected from your model)
    baseline = client.evals.batch(
        name="llama-3.2-8b",
        persona_id=persona.id,
        samples=base_model_responses,
    )

    print(f"Baseline drift: {baseline.avg_drift}%")

Protect Your Brand Voice

Get alerts when your AI drifts from the voice your customers expect.
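
To make "drift" concrete, here is a minimal sketch of one way a drift score and alert could work. It is an illustration only: this page does not describe how Lindr actually computes drift. The functions `drift_percent` and `should_alert`, the 0-100 score scale, and the threshold of 10 are all assumptions, and the observed scores are made-up placeholder values.

```python
from statistics import mean


def drift_percent(target: dict[str, float], observed: dict[str, float]) -> float:
    """Mean absolute gap between target and observed scores (assumed 0-100 scale).

    Hypothetical metric for illustration; not Lindr's documented formula.
    """
    return mean(abs(target[dim] - observed[dim]) for dim in target)


def should_alert(target: dict[str, float], observed: dict[str, float],
                 threshold: float = 10.0) -> bool:
    """Return True when the average gap exceeds the (assumed) alert threshold."""
    return drift_percent(target, observed) > threshold


# Target persona from the integration example above.
target = {"agreeableness": 85, "empathy": 80, "assertiveness": 65}

# Placeholder scores for a batch of responses (illustrative values only).
observed = {"agreeableness": 80, "empathy": 59, "assertiveness": 72}

print(f"Drift: {drift_percent(target, observed)}")
print(f"Alert: {should_alert(target, observed)}")
```

An average hides which dimension moved. In this example most of the gap comes from empathy, so a real alert would likely report per-dimension gaps as well.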

Customer Support Bots

Know immediately when your support bot becomes less empathetic or more robotic. Fix it before customers complain.

AI Assistants

Model updates can silently change your assistant's tone. Get alerted when friendliness drops or assertiveness spikes.

Multi-Model Deployments

Different LLMs have different personalities. Ensure consistent brand voice across GPT, Claude, and open-source models.
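
As a rough sketch of what a cross-model consistency check might look like, the snippet below computes the spread of each personality dimension across models and flags dimensions that differ by more than a tolerance. The helper names, the tolerance of 12 points, and the scores are all hypothetical placeholders, not benchmark results or part of the Lindr API.

```python
def dimension_spread(scores_by_model: dict[str, dict[str, float]]) -> dict[str, float]:
    """For each dimension, return the max-minus-min score across all models."""
    dimensions = next(iter(scores_by_model.values())).keys()
    return {
        dim: max(s[dim] for s in scores_by_model.values())
        - min(s[dim] for s in scores_by_model.values())
        for dim in dimensions
    }


def inconsistent_dimensions(scores_by_model: dict[str, dict[str, float]],
                            tolerance: float = 12.0) -> list[str]:
    """List dimensions whose cross-model spread exceeds the assumed tolerance."""
    spread = dimension_spread(scores_by_model)
    return [dim for dim, gap in spread.items() if gap > tolerance]


# Placeholder scores per model (illustrative values only).
scores = {
    "model_a": {"empathy": 78, "assertiveness": 60},
    "model_b": {"empathy": 70, "assertiveness": 74},
    "model_c": {"empathy": 81, "assertiveness": 66},
}

print(dimension_spread(scores))
print(inconsistent_dimensions(scores))
```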

Never let your AI go off-brand.

Try the demo. See your AI's brand voice in seconds.